In this blog post I will describe how you can dramatically improve the performance of a PHP CMS website hosted at a webspace provider, in this case Hosteurope. To achieve this I’m using nginx, haproxy, varnish, S3 and the CloudFront CDN.

A friend of mine sells her designer bridal dresses on her website, and occasionally her business is featured on TV fashion shows. When that happened in the past, her website broke down and was basically unreachable. She called me because she was expecting to be featured on another TV show soon, and this time she wanted her website to stay up and running, especially her web shop. Of course, there were only a few days left before the broadcast, so the solution had to be in place quickly.

From the outside it wasn’t clear why the website was unreachable when the traffic was surging in. Was the PHP of the CMS too inefficient and slow? Was the web server of the webspace provider too slow? Was the uplink saturated from all the large images and videos on her website? Because there was no way to figure that out quickly and all of those causes were plausible, I came up with a plan:

- Check if there is caching in place and if not add it to make the dynamic PHP site static, except for the online shop

- See if we can somehow dynamically add a CDN (Content Delivery Network) to the mix to serve all the large assets from other, more capable locations

It turned out that the CMS (I believe Drupal) had some sort of caching enabled but because cookies were enabled all the way and many elements in the HTML had dynamic queries in their URL, I wasn’t convinced that the caching actually had any effect.

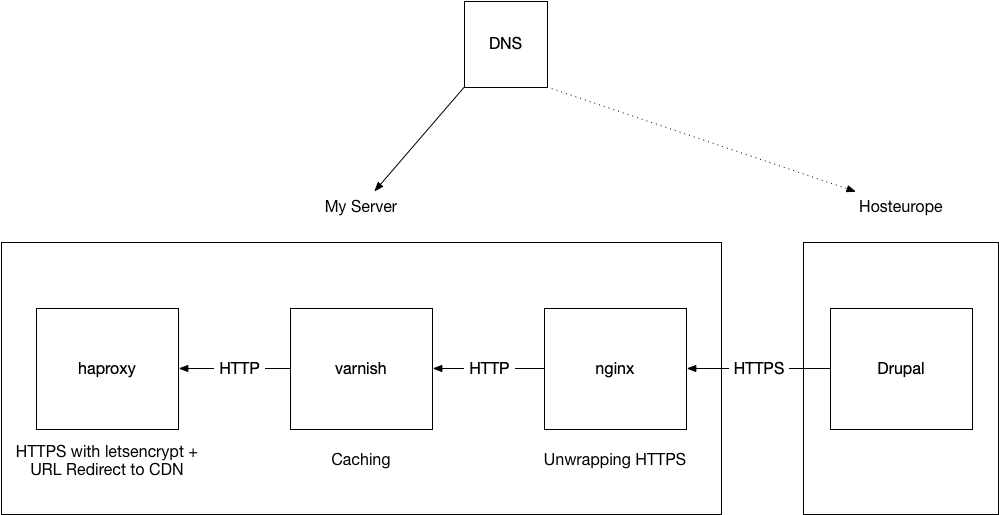

I wanted to add a caching reverse proxy in front of the website to have full control but of course that isn’t easy on a webspace provider. So I thought, maybe I could use my own server, set up varnish there and have the Hosteurope website work as its origin server. But there was another problem. The website was using HTTPS and it was not easy to disable it or download the certificates. In order to get the caching on my server to work I had to do this:

- Somehow unwrap the HTTPS/SSL

- Feed the decrypted HTTP to varnish

- Strip cookies and query parameters for the static parts of the website which do not change frequently

- Re-wrap everything in HTTPS with a letsencrypt certificate

- Point DNS to my server
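The cookie and query parameter step from the list above can be sketched in a few lines of Python. This is only an illustration of the idea, not what runs on my server: the function name `normalize_request` is made up, and dropping the query string is an assumption about what that step could look like (the varnish config further down only removes cookies). The path pattern is the one from that varnish config.

```python
import re
from urllib.parse import urlsplit, urlunsplit

# Paths that must stay dynamic (shop and admin), same pattern as in the varnish config.
DYNAMIC = re.compile(r"(cart|user|system)")


def normalize_request(url, headers):
    """Return (url, headers, cacheable) as a caching proxy could treat the request."""
    if DYNAMIC.search(url):
        # Shop and admin requests pass through untouched and are not cached.
        return url, dict(headers), False
    parts = urlsplit(url)
    # Drop the query string so /page?x=1 and /page?x=2 share one cache entry.
    clean_url = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    # Drop cookies so every visitor gets the same cached object.
    clean_headers = {k: v for k, v in headers.items() if k.lower() != "cookie"}
    return clean_url, clean_headers, True
```

Everything that is not part of the shop or admin area ends up with a cookie-free, parameter-free request, which is what makes it safe to serve the same cached copy to everyone.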

This is the nginx config:

    upstream remote_https_website {
        server <origin_ip_address>:443;
    }

    server {
        listen 8090;
        server_name www.popularwebsite.com popularwebsite.com;

        location / {
            # Fetch from the origin over HTTPS; varnish talks plain HTTP to this port
            proxy_pass https://remote_https_website;
            proxy_set_header Host www.popularwebsite.com;
        }
    }

This is the varnish config:

    vcl 4.0;

    # This backend is nginx
    backend default {
        .host = "127.0.0.1";
        .port = "8090";
    }

    # Remove cookies except for shop and admin interface
    sub vcl_recv {
        if (req.url ~ "(cart|user|system)") {
            return (pass);
        } else {
            unset req.http.Cookie;
        }
    }

    # Add caching header to see if it's working
    sub vcl_deliver {
        # Display hit/miss info
        if (obj.hits > 0) {
            set resp.http.X-Cache = "HIT";
        } else {
            set resp.http.X-Cache = "MISS";
        }
    }

After setting this up, it was time to test whether it actually made things better. For this kind of quick test I like to use a tool called wrk. I temporarily modified my local /etc/hosts file to point the domain to my server and then fired away. This alone provided a roughly 3x increase in requests per second, from ~18 to about 60. However, if you start small, 3x is not that amazing.

It is worth pointing out that the cross-datacenter latency between my server and Hosteurope is negligible in this scenario. Since varnish is caching most of the requests, the origin server is barely ever contacted once the cache is filled. Very quickly the varnish statistics showed nothing but cache hits, all served directly from RAM.
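If you want to confirm the same thing from the client side, the X-Cache header set in vcl_deliver is enough. Here is a minimal sketch; the helper names `sample_x_cache` and `hit_ratio` are made up for this example, and it assumes the domain already resolves to the caching server (for instance via the /etc/hosts entry).

```python
from collections import Counter
from urllib.request import urlopen


def sample_x_cache(url, n=20):
    """Request `url` n times and collect the X-Cache header set in vcl_deliver."""
    values = []
    for _ in range(n):
        with urlopen(url) as resp:
            values.append(resp.headers.get("X-Cache", "UNKNOWN"))
    return values


def hit_ratio(values):
    """Fraction of responses marked HIT; 0.0 for an empty sample."""
    counts = Counter(values)
    total = sum(counts.values())
    return counts["HIT"] / total if total else 0.0
```

Calling `hit_ratio(sample_x_cache("https://www.popularwebsite.com/"))` should approach 1.0 once the cache is warm, with the first request for a page typically showing up as a MISS.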

This kind of test is always limited though. My server was still relaxed: the CPU was bored, my 1Gbit uplink was not saturated, and the disk with a ZFS mirror and 16GB of read cache was not holding back throughput either. The limit was, of course, my own machine and home internet connection generating the load.

To properly simulate TV broadcast conditions you need a distributed load test, and because I didn’t have the time to set that up, I moved on to the next problem, which was getting the large assets delivered from a CDN. I know from experience what my server, haproxy, varnish and nginx are capable of, and I was confident they would not buckle.

Getting the assets onto a CDN wasn’t easy either, as it would have meant manually going through all pages of the website in the CMS and changing every single one of them.

Luckily, most of the asset URLs followed a consistent path structure, which meant I could download the folders containing the images and videos, upload them to S3 and put the AWS CloudFront CDN in front of them.

When a user is browsing to the website which is now essentially hosted on my server, all the referenced assets will also point to it. This means that I can rewrite and redirect the asset URLs to point to the CDN instead. The overhead of the 303 redirects would be ok.
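To make the rewrite concrete, here is a small Python sketch of the mapping from an asset URL on my server to its CDN location. It mirrors the two redirect rules of the haproxy config rather than replacing them; the function name `cdn_redirect` and the `RULES` table are made up for this example, and the example file names in the note below are invented.

```python
import re

CDN = "https://d37nu8xxtvb77.cloudfront.net"

# (path prefix that triggers the rule, prefix stripped from the URL)
RULES = [
    ("/sites/default/files/videos/", "/sites/default/files/videos"),
    ("/sites/default/files/styles/", "/sites/default/files"),
]


def cdn_redirect(url):
    """Return (303, location) for asset URLs, or None if the request goes to varnish."""
    path = url.split("?", 1)[0]
    for prefix, strip in RULES:
        if path.startswith(prefix):
            # Like regsub without the g flag: remove only the first match.
            return 303, CDN + re.sub(re.escape(strip), "", url, count=1)
    return None
```

A request for `/sites/default/files/videos/show.mp4` would be sent to `/show.mp4` on the CDN, while image styles keep their `/styles/...` path. Anything else returns None and is served from the cache as usual.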

This is the final haproxy config:

    global
        maxconn 200000
        daemon
        nbproc 4
        stats socket /tmp/haproxy

    defaults
        mode http
        retries 3
        option redispatch
        maxconn 20000
        timeout connect 5000
        timeout client 50000
        timeout server 50000
        log global
        option dontlog-normal
        option tcplog
        option forwardfor
        option http-server-close

    # Plain HTTP: redirect everything to HTTPS
    frontend http-in
        mode http
        option httplog
        option http-server-close
        option httpclose
        bind :::80 v4v6
        redirect scheme https code 301 if !{ ssl_fc }

    # HTTPS: terminate SSL, redirect assets to the CDN, send the rest to varnish
    frontend https-in
        option http-server-close
        option httpclose
        rspadd Strict-Transport-Security:\ max-age=31536000;\ includeSubDomains;\ preload
        rspadd X-Frame-Options:\ DENY
        reqadd X-Forwarded-Proto:\ https if { ssl_fc }
        bind :::443 v4v6 ssl crt /usr/local/etc/ssl/haproxy.pem ciphers AES128+EECDH:AES128+EDH force-tlsv12 no-sslv3
        acl video path_beg /sites/default/files/videos/
        acl images path_beg /sites/default/files/styles/
        http-request redirect code 303 location https://d37nu8xxtvb77.cloudfront.net%[url,regsub(/sites/default/files/videos,,)] if video
        http-request redirect code 303 location https://d37nu8xxtvb77.cloudfront.net%[url,regsub(/sites/default/files,,)] if images
        default_backend popular_website_cache

    backend popular_website_cache
        server varnish 127.0.0.1:8080

    listen stats
        bind :1984
        stats enable
        stats hide-version
        stats realm Haproxy\ Statistics
        stats uri /
        stats auth stats:mysupersecretpassword

Another benefit of this setup is increased introspection, as varnish and haproxy each have their own elaborate statistics reporting. Spotting errors and problems becomes very easy, as does confirming that everything works.

The last piece of the puzzle was to configure AWS CloudFront properly, as I had never done this before. It is worth mentioning, though, that if you’re not sure whether you want this permanently, CloudFront is the most unbureaucratic way of setting up a CDN. Most others will bug you with sales droids requesting lengthy calls for potential upsells and long-term contracts. With AWS you can just log in with your Amazon account, set things up and use them as long as you need them. No strings attached.

As a last preparation step I reduced the TTL of the DNS records to the lowest setting, which was 5 minutes at Hosteurope, so that in case something went wrong I could switch back and forth rather quickly.

Then it was time for the broadcast, and this time the traffic surge was handled with ease. Instead of breaking down and being unreachable, the site served ~15k users and ~700k requests within 1-2 hours. CloudFront served ~48GB of assets while my server delivered ~2GB of cached HTML plus some JS and CSS which was too cumbersome to move to the CDN.
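To put those numbers into perspective, here is a quick back-of-the-envelope calculation in Python. It only uses the approximate figures above; the helper names are made up, and since it is not clear whether the ~700k requests include the ones answered by CloudFront, treat the result as a rough average rather than a measurement.

```python
def average_rps(total_requests, hours):
    """Average requests per second over the given window."""
    return total_requests / (hours * 3600)


def cdn_share(cdn_gb, server_gb):
    """Fraction of the transferred volume that the CDN served."""
    return cdn_gb / (cdn_gb + server_gb)


# Approximate figures from the broadcast
for hours in (1, 2):
    print(f"{hours}h window: ~{average_rps(700_000, hours):.0f} requests/s on average")
print(f"CDN share of traffic volume: {cdn_share(48, 2):.0%}")
```

That works out to somewhere between roughly 100 and 200 requests per second on average, with peaks certainly higher, and about 96% of the traffic volume coming from the CDN. Compared with the ~18 requests per second the origin managed in my quick wrk test, it is not surprising that the site used to fall over.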

This is of course a temporary setup but it worked and solved the problem with about half a day of work. All humming and buzzing in a FreeBSD Jail without any docker voodoo involved. Made me feel a little bit like the internet version of Mr. Wolf.

What we learned from all the statistics of this experiment is that Hosteurope most likely does not provide enough bandwidth for the webspace to host all those large assets. It would be wise to move them to a CDN either way, which would then require the manual labour of changing all the links in the CMS.

Until the transition is made, I’ll keep my setup on stand-by. Either way I hope this is helpful for other people searching for solutions for similar problems.

Lastly, I want to address the common question of why I’m not just using nginx for everything that haproxy and varnish are doing.

The answer is that while nginx can indeed do everything, it isn’t great at caching, SSL termination and load balancing. Both haproxy and varnish are highly specialised and optimised tools that provide fine-grained control, high performance and, as mentioned above, in-depth statistics and debug information which nginx does not provide at all.

To me it’s like having a multitool instead of dedicated tools for specific jobs. Sure, you can cut, screw and pinch with a multitool, but doing those things with dedicated tools will be more satisfying and give you more control and potentially better results.

I am not quite sure, what you need varnish and haproxy for in your setup. You can do the caching part as well as the rewriting/redirecting directly in nginx with just a few lines of config. See proxy_cache and redirect directives.

That would maybe make the setup easier to maintain, with fewer potential points of failure and fewer internal connections, thus reducing RTT.

I’m aware that nginx can do all of that but it isn’t great at either one of those things. haproxy as well as varnish are specialized for their jobs and do them exceptionally well. Plus they provide in depth statistics of what is going on. Something nginx does not do either.

if you’re lazy -> instead of cloudfront/s3 you could simply use cloudflare, they take care of extracting all assets and delivering them via their cdn. you can even drop haproxy, they do offer free certs for SSL

I spoke to them first but immediately had to deal with sales droids contacting me. I had some questions they couldn’t answer and since I didn’t have experience setting that up, I went with the better-known route where I knew I also have no billing strings attached. I even called their support hotline to ask whether certain things were possible to set up with Cloudflare and they couldn’t give me a straight answer. Was quite disappointing.

ok, just realized it’s not a personal website. so it won’t be free

yup – where AWS gives you 50GB per month for free – if i understood it correctly. Will see the bill by the end of the month 🙂

nevertheless i can really recommend them, works like a charm with a few minutes to setup. there’s no traffic cap, so the base level is still reasonably priced I think. you should give it a try with the free version, the 3 included page rules should suffice to drop varnish and strip cookies. switch to pro if all works out.

I meant the basic package of course, not their pro

cloudflare has min ttl of 60 or 120, and just dns is free iirc.

a bit better to quickly failover